[ad_1]

Introduction

Each day, the Uber app moves millions of people around the world and delivers tens of millions of food and grocery orders. Each trip or delivery order depends on multiple low-latency and highly reliable database interactions. Uber has been running an open-source Apache Cassandra® database as a service that powers a variety of mission-critical OLTP workloads for more than six years now at Uber scale, with millions of queries per second and petabytes of data.

As we scaled the Cassandra fleet and onboarded critical use cases, we faced numerous operational challenges. This blog shows our journey on how we debugged the challenges that pop up when we run Cassandra at scale. The blog also exemplifies the power of smaller, incremental changes in service that give you compounding reliability in return at scale.

Summary

Open-source Apache Cassandra was introduced as a managed service in 2016 to power Uber’s core services. The Cassandra service grew over a period of time. As our Cassandra service grew, we were faced with daunting challenges.

This blog is divided into two sections. The first part walks through the architecture summary of Cassandra deployment within Uber; the second part talks about production challenges we faced as we scaled our Cassandra fleet and how we tackled them.

A Managed Service

Cassandra is run as a managed service by the Cassandra team within Uber, and the team is responsible for the following:

- Implement new features in Cassandra and contribute back to the community

- Integrate into Uber’s ecosystem, such as control plane, configuration management, observability, and alert management

- Critical bug fixes and contributions to the community

- Providing a one-stop shop for Cassandra as a managed solution for Uber’s application teams

- Ensure 99.99% availability and 24/7 support for Uber’s application teams

- Data modeling for our partner teams

- Guide teams on best practices while using Cassandra

Scale

- Tens of millions of queries per second

- Petabytes of data

- Tens of thousands of Cassandra nodes

- Thousands of unique keyspaces

- Hundreds of unique Cassandra clusters, ranging from 6 to 450 nodes per cluster

- Span across multiple regions

Architecture

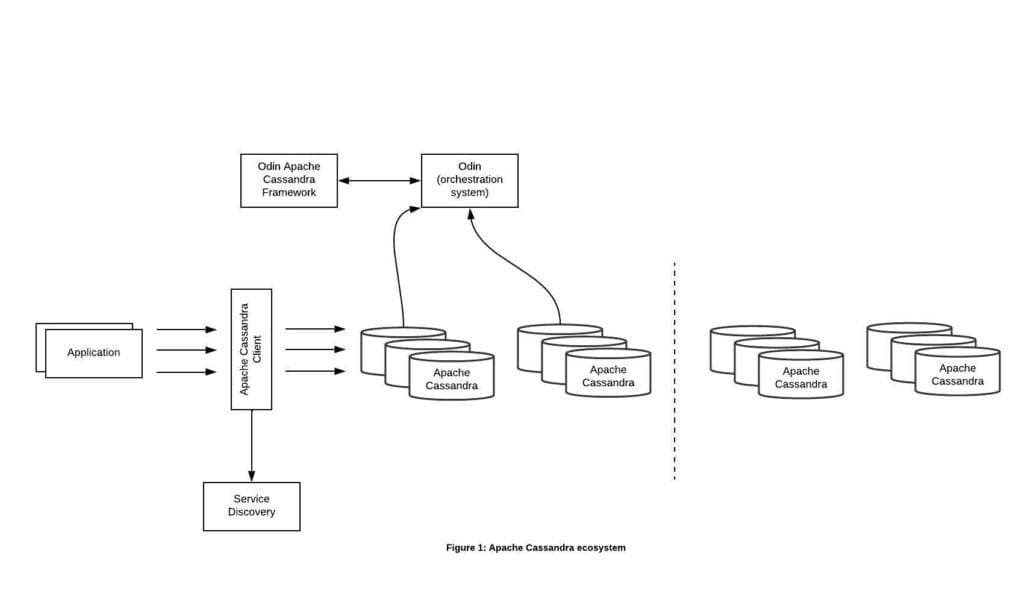

At a high level, a Cassandra cluster will span across regions with the data replicated between them. The cluster orchestration and configuration are powered by Uber’s in-house developed stateful management system, known as “Odin”.

Cassandra Framework

Cassandra framework is an in-house developed technology powered by our stateful control plane, Odin, and the framework is responsible for the lifecycle of running Cassandra in Uber’s production environment. The framework adheres to Odin’s standards and focuses specifically on Cassandra’s functionality. The framework manages all the complexity of the way Cassandra is supposed to function for one-click operations, such as seed node selection, rolling restart, capacity adjustments, node replacement, decommission, node start/stop, nodetool commands, etc.

Cassandra Client

Both Go and Java open-source Cassandra clients have been forked and integrated with the Uber ecosystem. The clients are capable of discovering the initial contact points using the service discovery mechanism, so there’s no need to hardcode the Cassandra endpoints. It also has additional observability (e.g., capturing query fingerprints, key DDL/DML features mapping, etc.) that helps debug production issues and future decision-making.

Service Discovery

Service discovery plays a crucial role in a large-scale deployment. In collaboration with Uber’s in-house service discovery and stateful platform, each Cassandra cluster is uniquely identified, and their nodes can be discovered in a real-time fashion.

Whenever a Cassandra node changes its status (such as Up, Down, Decommission, etc.), then the framework notifies the service discovery of the change. Applications (a.k.a., Cassandra clients) use the service discovery as its first contact point to connect to the associated Cassandra nodes. As the nodes change their status, the discovery adjusts the list accordingly.

Workloads

Cassandra As A Service powers a variety of workloads at Uber, and each workload serves unique use cases at Uber. The workloads vary from read-skewed to mixed to write-skewed. Thus, it is impossible to characterize a unique pattern given so many different use cases using Cassandra. One commonality seen is that the majority of them utilize Cassandra’s TTL (time-to-live) feature.

Challenges at Scale

Since the Cassandra service’s inception at Uber, it has continued to grow year-over-year, and more critical use cases have been added to the service. As we scaled, we were hit with significant reliability challenges. Some of the challenges are highlighted below.

Unreliable Node Replacement

Reliable node replacement is an integral part of any large-scale fleet. Daily node replacement predominantly occurs due to the following two reasons:

- Hardware failures

- Fleet optimization

There are less common situations that trigger substantial node replacement, such as changes to the deployment topology or disaster recovery.

Cassandra’s graceful node replacement can be done simply by decommissioning the existing node (a.k.a., nodetool decommission) followed by adding a new node. But we faced a few hiccups:

- Node decommission stuck forever

- Node addition failing intermittently

- Data inconsistency

These issues do not occur with every single node replacement, but even a smaller percentage has the potential to stall our entire fleet, which adds piles of operational overhead on teams. As an example, a success rate of 95% means 5 failures out of 100 node replacements. Supposedly, if we have 500 nodes getting replaced every day, then 25 manual operations, which is equivalent to 2 engineers dedicated only to recovering from these ad-hoc failures. Over the period of time, the problem would become so severe that we would have had to pause most of our automation, which would stall other initiatives; we could only continue emergency operations that could not be avoided, such as hardware failure.

This is how we attacked this primary problem.

Issues Due to Cassandra Hints

Cassandra does not clean up hint files for orphan nodes–say a node N1 is a legit node, and it has stored hint files locally for its peer node that was part of the Cassandra ring in the past, but not anymore. In this case, also, node N1 does not purge the hint files. To add more to the pain, when N1 decommissions then, it transfers all these orphan hint files to its next successor. Over the period of time, the (orphan) hint files kept growing, resulting in terabytes of garbage hint files.

Adversely, the decommission code path has a rate limiter, and the speed of the limiter is inversely proportional to the number of nodes. So, in a really large cluster, if a decommissioning node needs to transfer all its hint files, say in terabytes, to its successor, then it could take multiple days.

To solve this problem, we changed a few things in Cassandra and the ecosystem:

- Proactively purged the hint files belonging to orphan nodes in the ring

- Dynamically adjusted the hint transfer rate limiter (hinted_handoff_throttle_in_k), so in case of a huge backlog, the hint transfer would finish in hours instead of lingering for days

This has improved our node replacement reliability significantly and reduced the replacement time by order of magnitude, only to realize that we were hit with the same problem again.

Confusion arose in the team about whether the above solution worked or not. Upon digging further, we discovered that the decommission step intermittently errors out, and the most common reason was some other parallel activity, such as rolling restart due to regular fleet upgrades.

Unfortunately, the control plane cannot probe Cassandra about the decommissioned state, as there are no such JMX metrics exposed. A similar pattern was observed as part of the node bootstrap phase. We found a gap here and decided to improve the Cassandra bootstrap and decommission code path by exposing the state through JMX. With this additional knowledge, our control-plane layer can probe the current status of the decommission/bootstrap phase, and it can take necessary action instead of just blocking forever.

With the above change and a few more fixes, our node replacement became 99.99% reliable and completely automated, and the median replacement time was reduced significantly. Due to these improvements, our automation, as of today, is already replacing tens of thousands of nodes in just a few weeks!

The Error Rate of Cassandra’s Lightweight Transactions

There are a few business use cases that rely on Cassandra’s Lightweight Transactions pretty heavily, and that too at scale. Those cases suffered higher error rates every other week due to the following reason:

It was a general belief that Cassandra’s LWT was unreliable. To combat this degradation of our business once and for all, our team started a focused effort.

The above error can occur in case of more than one pending range, and one of the possibilities is somehow, we trigger multiple node replacements at the same time. We did a thorough analysis of our entire control plane to check for such a scenario and fixed a few corner cases. Eventually, we exhausted all our control-plane scenarios. Then we moved our focus to Cassandra, and further analysis revealed that only one Cassandra node believes in two token range movements; however, the majority believes there is only one. We added a metric in Cassandra followed by an alert to probe various stats and logs when the issue happens, and we were lucky to catch the following Gossip exception, thrown due to the failure of DNS resolution in code, on the culprit node:

The above exception led us to the root cause, which was when a new node (N2) is brought up as part of the replacement, then we need to supply the leaving nodes’ host-name (N1) to Cassandra JVM as cassandra.replace_address_first_boot=N1. Even after N2 successfully joins the ring, the Gossip code path on N2 continues to resolve N1’s IP address, and as expectedly, at some point, N1’s DNS resolution would throw an exception leaving Cassandra’s caches out of sync with other nodes. At this point, the node restart (N2) is the only solution.

We improved the error handling inside the Gossip protocol; as a result, Cassandra’s LWT became robust, and what used to be the issue every couple of weeks is no longer seen even once in the last twelve months or so!

Repair is Like Compaction

One complaint we have heard from our stakeholders is data inconsistency due to data resurrection, and it was due to a sluggish Anti-entropy (Cassandra repairs).

Anti-entropy (Cassandra repairs) is important for every Cassandra cluster to fix data inconsistencies. Frequent data deletions and downed nodes are common causes of data inconsistency. There are a few open-source orchestration solutions available that trigger repair externally. But at Uber, we wanted to rely less on a control plane-based solution. Our belief was that the repair activity should be an integral part of Cassandra itself, very much like Compaction. Keeping that goal in mind, we embarked on our journey to have the repair orchestration inside Cassandra itself that will repair the entire ring one after another.

At a higher level, a dedicated thread pool is assigned to the repair scheduler. The repair scheduler inside Cassandra maintains a new replicated table under system_distributed keyspace. This table maintains the repair history for all the nodes, such as when it was repaired the last time, etc. The scheduler will pick the node(s) that ran the repair first and continue orchestration to ensure each-and-every table and all of their token ranges are repaired. The algorithm is also capable of running repairs simultaneously on multiple nodes and also splits the token range into sub-ranges with the necessary retry to handle transient failures. Over the period, the automatic repair has become so reliable that it runs as soon as we start a Cassandra cluster, like Compaction, and does not require any manual intervention.

Due to this fully automated repair scheduler inside Cassandra, there is no dependency on the control plane, which reduced our operational overhead significantly. This automated repair scheduler brought down our p99 repair duration from tens of days to just under a single-digit number of days!

Conclusion

In this article, we have showcased Cassandra’s genesis at Uber and the importance of incremental changes to stabilize a large-scale fleet. We have also taken a deep dive into the architecture and explained how the entire Cassandra service was designed and has been running successfully for so many years without impacting stringent SLAs. In the next part of this series, we will focus on how we are taking Cassandra’s reliability to the next level.

Header Image Attribution: The “Wolverine Peak summit ridge” image is covered by a CC BY 2.0 license and is credited to Paxson Woelber.

[ad_2]

Source link