[ad_1]

Overview

With the evolution of storage table formats Apache Hudi®, Apache Iceberg®, and Delta Lake™, more and more companies are building up their lakehouse on top of these formats for many use cases, like incremental ingestion. But the speed of upserts sometimes is still a problem when the data volumes go up.

In storage tables, Apache Parquet is used as the main file format. In this article, we will discuss how we built a row-level secondary index and the innovations we introduced in Apache Parquet to speed up the upsert data inside a Parquet file. We will also demonstrate benchmarking results that show much faster speeds than traditional copy-on-write in Delta Lake and Hudi.

Motivation

Efficient table ACID upsert is critical for today’s lakehouse. Important use cases, such as Data Retention and Change Data Capture (CDC), rely heavily on it. While Apache Hudi, Apache Iceberg, and Delta Lake are widely adopted for these use cases, the upserts slow down when the data volume scales up, particularly for copy-on-write mode. Sometimes, the slow upserts become time- and resource-consuming tasks or even blockers to finishing the task on time.

To improve the speed of upserts, we introduced partial copy-on-write within Apache Parquet files with row-level index, which can skip unnecessary data pages (the smallest storage unit in Apache Parquet), reading and writing efficiently. The term “partial” here means only performing upserts for the related data pages inside a file but skipping the unrelated ones. Generally, only a small portion of the file needs to be updated, and most of the data pages can be skipped. We have observed increased speed when compared with the copy-on-write in Delta Lake.

Copy-On-Write in LakeHouse

In this article we use Apache Hudi as an example, but the similar idea can apply to Delta Lake and Apache Iceberg. Apache Hudi supports two types of upserts: copy-on-write and merge-on-read. With copy-on-write, all the files that have the record within the scope of updates are rewritten to new files and then new snapshot metadata is created to include the new files. In contrast, merge-on-read just adds delta files for the update and leaves it to the reader to merge it. Some use cases like Right to be Forgotten generally use copy-on-write mode because it relieves the pressure for reading.

The diagram below shows an example when a partitioned table is updated for one field. From a logical view, the email field for User ID1 is replaced with a new email and no other fields are changed. Physically, the table data is stored on disk as individual files, and in most cases those files are grouped as partitions based on time or other partitioning mechanisms. Apache Hudi uses an indexing system to locate the impacted files in each partition, then reads them completely, updates the email fields in memory, and finally writes to disk and forms new files. The red color in the diagram shows new files that are rewritten.

As mentioned in the blog ‘Building a Large-scale Transactional Data Lake at Uber Using Apache Hudi’, some of our tables received updates that were spread across 90 percent of the files, resulting in data rewrites of around 100 TB for any given large-scale table in the data lake. So the speed of copy-on-write is critical to many use cases. Slow copy-on-write could not only cause the job to run longer but also consume more compute resources. In some use cases, we see a significant amount of vCore being used, which is equivalent to millions of dollars spent.

Introduce Row-Level Secondary Index

Before discussing how we can improve copy-on-write within Apache Parquet, we would like to introduce the Parquet row-level secondary index, which we use to locate the data pages in Parquet to help accelerate copy-on-write.

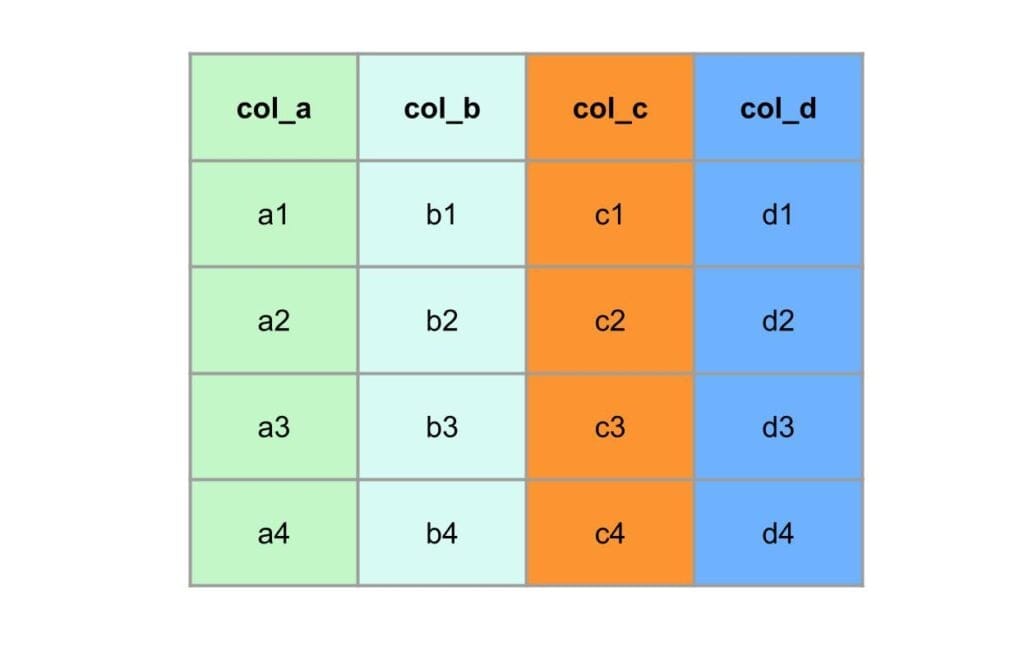

The Parquet row-level secondary index is built when a Parquet file is first written or through offline reading of Parquet files. It maps the record to [file, row-id] instead of just [file]. For instance, the RECORD_ID can be used as the index key and FILE and Row_IDs are used to point to files and the offset of each file.

Inside Apache Parquet, data is partitioned into multiple row groups. Each row group consists of one or more column chunks which correspond to a column in the dataset. The data for each column chunk is then written in the form of pages. A block is composed of pages, which are the smallest unit that must be read fully to access a single record. Inside the page, except for the dictionary page encoded, each field is appended with value, repetitive level, and definition level.

As described in the above picture, each index points to the rows inside a page where that record exists. With the row-level index, when an update is received we can quickly locate not only which file, but also which data pages need to be updated. This would help us skip all other pages that don’t need to be updated and save a lot of compute resources to speed up the copy-on-write process.

Copy-On-Write within Apache Parquet

We introduced a new way to perform copy-on-write within Apache Parquet to enable fast upserts for Lakehouse. We only perform copy-on-write updates for the related data pages inside a Parquet file, but skip the unrelated ones by just literally copying as bytebuffer without any change. This reduces the amount of data that needs to be updated during an upsert operation and improves performance.

We demonstrated the new copy-on-write process as a comparison with the traditional one. In the traditional Apache Hudi upserts, Hudi utilizes the record index to locate the files that need to be changed, then read the file record by record into memory, and then search the record to be changed. After applying the change, it writes the data to disk as a whole new file. During this read-change-write process, there are expensive tasks (e.g., de(re)-compression, de(re)-encoding, record de(re)-assembling with repetition level, definition level, etc.) that would consume a huge amount of CPU cycles and memories.

To improve this time- and resource-consuming process, we use row-level index and Parquet metadata to locate the pages that need to be changed. For those pages that are not in the scope of changes, we just literally copy the data to the new file as a bytebuffer without de(re)-compression, de(re)-encoding, or record de(re)-assembling. We call it the “copy & update” process. It is described in more detail in the following diagram.

Benchmarking Results

We conducted benchmarking tests to compare the performance of our fast copy-on-write approach with traditional approaches (such as Delta Lake) with TPC-DS data.

We set up the test with out-of-box configurations by using the TPC-DS sales data with the same number of vCores and the memory settings of Spark jobs. We chose some percentage of data from 5 to 50% to be updated, then compared the consumed time for Delta Lake and the new copy-on-write. We identify 50% as a maximum is sufficient for real use cases.

The test results show that the new approach can achieve speeds that are significantly faster. The gained performance is consistent when the percentage of updated data.

Disclaimer: Benchmark on DeltaLake used the default out-of-box configurations.

Conclusion

In conclusion, efficient ACID upserts are critical for today’s data lakehouse. While Apache Hudi, Delta Lake, and Apache Iceberg are widely adopted, the slowness of upserts remains a challenge, particularly when the data volume scales up. To address this challenge, we introduced partial copy-on-write within Apache Parquet files with row-level indexing, which can skip unnecessary data pages reads and writes efficiently. We have demonstrated that this approach can significantly improve the speed of upserts. Our approach enables companies to efficiently perform data deletion and CDC, and other important use cases that rely on efficient table upserts in the lakehouse.

Future Work

We plan to integrate the row-level index and fast copy-on-write feature to Apache Hudi, on which Uber’s data Lake House is built. We will see how this integration will improve the performance of Apache Hudi and help our customers with issues like incremental ingestion. Stay tuned!

[ad_2]

Source link