Introduction

In this blog, we will share the details of our journey on Data Lifecycle Management (DLM) at Uber. We’ll start with some of the initial work, our realization of the need for a unified system, the different aspects of such a system, and the architecture to realize it. Finally, we’ll wrap up with some forward-looking views for Uber’s DLM System.

Data Lifecycle Operations

There are many operations applicable at different lifecycle stages of data, which are referred to as Data Lifecycle Operations.

The lifecycle operations encompass a wide variety, including but not limited to:

- Operations applicable based on the age of data (e.g., move data not accessed for X months to cold storage, delete all data older than Y years)

- Operations applicable based on the type of data (e.g., delete all user Personally Identifiable Information (PII) corresponding to deleted user accounts, apply Access Control for all sensitive data)

- Operations applicable on a periodic basis (e.g., weekly backup of identified tables)

- Operations applicable on an on-demand basis (e.g., deletion of user data when user exercises ‘right to be forgotten’)

Data Lifecycle Management (DLM)

Data Lifecycle Management (DLM) is the management of these Lifecycle Operations. It involves categorizing data, authoring lifecycle policies, automating the various actions, and monitoring for policy conformance.

Importance of DLM

DLM is critical to any organization to meet the 3 key goals of Compliance, Cost Efficiency, and Data Reliability.

| Key Goal | Benefits |
| --- | --- |
| Compliance Requirements (e.g., GDPR, SOX, HIPAA) | Improve the organization’s compliance and risk posture; enable the organization to operate globally/locally with minimum disruptions to the business, and avoid penalties from a regulatory and compliance standpoint (penalties amounting to several million USD) |
| Cost Efficiency (e.g., timely deletion of old, unused data, migrating data to the appropriate storage tier based on access patterns) | Deletion of old unused data of several PB each month, leading to storage cost savings of several million USD per year; storage of data in appropriate storage classes (hot, warm, cold) in order to optimize on storage cost, thereby saving several million USD per year |
| Data Reliability | Ensure backups for data recovery in unexpected scenarios including data corruption and accidental data deletion |

Background & Motivation

Data at Uber

Data and Data Lifecycle Operations at Uber present some unique and complex challenges, namely:

- Supporting a wide range of Data Lifecycle Operations for a variety of datastores used by Uber, ranging from:

  - Offline Data Stores like HDFS

  - Online Data Stores such as MySQL, Schemaless, Cassandra, DocStore

  - Cloud Data Stores from Public Cloud Providers (Cloud Object Storage in AWS/GCP)

- Scale of data and datasets. Uber is well-known for its scale in the industry, so much so that “Uber scale” is synonymous with huge scale.

  - Uber has one of the largest on-premise Hadoop Data Lakes in the industry with over 1EB of data

  - Uber has 500K+ Hive tables, and growing

We need to build a system that scales to support execution of various lifecycle operations across all datasets on different datastores.

Where We Started

Lack of Holistic Approach

Individual teams built their own solutions for a few specific Data Lifecycle Operations.

Some of the early efforts by the individual teams led to some very specific implementations/automations. For example, individual teams built their own versions of old unused data deletion for the specific storage technologies supported by their team, or each individual team had a different concept and mechanism for identifying and moving data across different storage tiers.

Siloed Execution

Different requirements were handled in unique ways across teams.

Some of the individual teams had specialized requirements, such as approvals for certain destructive operations (like old unused data deletion) or monitoring (like the progress of retrospective application of a particular lifecycle operation). Again, these were handled in a localized manner, with each team determining its own locally optimal approach and building a process or automation around it.

Evolution of Requirements

Some of these requirements evolved over time:

- With improved understanding of the underlying compliance requirements (evolution of privacy policies around the world)

- With support for newer storage features (such as Erasure Coding)

Fundamental Concepts

Dataset Metadata

The dataset metadata defines the characteristics of the dataset. This includes:

- Age of data

- Type of data: whether PII, sensitive, or non-sensitive

- Current location of data in terms of storage tier

Lifecycle Policy

The lifecycle policy defines the intended action to be taken upon datasets with certain characteristics. For example:

- Delete all data after d days of creation time

- Encrypt all columns with PII data

- For all data older than m months and having less than lower_threshold number of accesses, move it from the hot to warm storage tier
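The relationship between dataset metadata and lifecycle policies can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the class names, field names, and the `applies_to` predicate are hypothetical and are not taken from Uber's system, and ages are measured in days rather than months for simplicity.

```python
from dataclasses import dataclass
from datetime import date
from typing import Callable, List


@dataclass(frozen=True)
class DatasetMetadata:
    # Hypothetical fields mirroring the metadata characteristics listed above.
    name: str
    created_on: date
    data_type: str      # "pii", "sensitive", or "non_sensitive"
    storage_tier: str   # "hot", "warm", or "cold"
    access_count: int   # number of accesses in the observation window


@dataclass(frozen=True)
class LifecyclePolicy:
    name: str
    action: str
    # Predicate deciding whether the policy applies to a dataset on a given day.
    applies_to: Callable[[DatasetMetadata, date], bool]


def age_in_days(ds: DatasetMetadata, today: date) -> int:
    return (today - ds.created_on).days


def ttl_delete(days: int) -> LifecyclePolicy:
    """Delete all data after `days` days of creation time."""
    return LifecyclePolicy(
        name=f"delete_after_{days}d",
        action="delete",
        applies_to=lambda ds, today: age_in_days(ds, today) > days,
    )


def tier_hot_to_warm(min_age_days: int, lower_threshold: int) -> LifecyclePolicy:
    """Move old, rarely accessed data from the hot to the warm tier."""
    return LifecyclePolicy(
        name="tier_hot_to_warm",
        action="move_to_warm",
        applies_to=lambda ds, today: (
            ds.storage_tier == "hot"
            and age_in_days(ds, today) > min_age_days
            and ds.access_count < lower_threshold
        ),
    )


def applicable_policies(
    ds: DatasetMetadata, policies: List[LifecyclePolicy], today: date
) -> List[str]:
    return [p.name for p in policies if p.applies_to(ds, today)]


policies = [ttl_delete(365), tier_hot_to_warm(min_age_days=90, lower_threshold=10)]
trips = DatasetMetadata("trips_2023", date(2023, 1, 1), "non_sensitive", "hot", 3)
print(applicable_policies(trips, policies, today=date(2023, 6, 1)))
```

Expressing applicability as a predicate over metadata is what lets the system, rather than a person, decide which actions are relevant for each dataset.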

Realization: Need for a Unified System

To start with, the scope of DLM was to execute actions based on manual identification (e.g., for table t, delete data after d days of creation time). However, over time, we required a way for the system to automatically determine the relevant actions for different datasets.

This led to the creation of Lifecycle Policies, which define the policy action based on dataset metadata.

Next, there was a need for a unified system that could handle different policies for different datasets. This was to ensure that:

- Among all applicable policies for a dataset, the right set of policies are selected for policy action execution

- Similar policies are applicable for datasets across datastores, so it is desirable to centralize the policy determination logic and enable common monitoring of the policy execution

Beyond just the execution of lifecycle operations, the associated steps of the dataset onboarding process need to be integrated with the system, such as candidate dataset selection and the approval process (needed because we are performing potentially destructive operations on existing datasets, which are used by existing systems and use cases).

Scope & Challenges

What Does Data Lifecycle Management Entail?

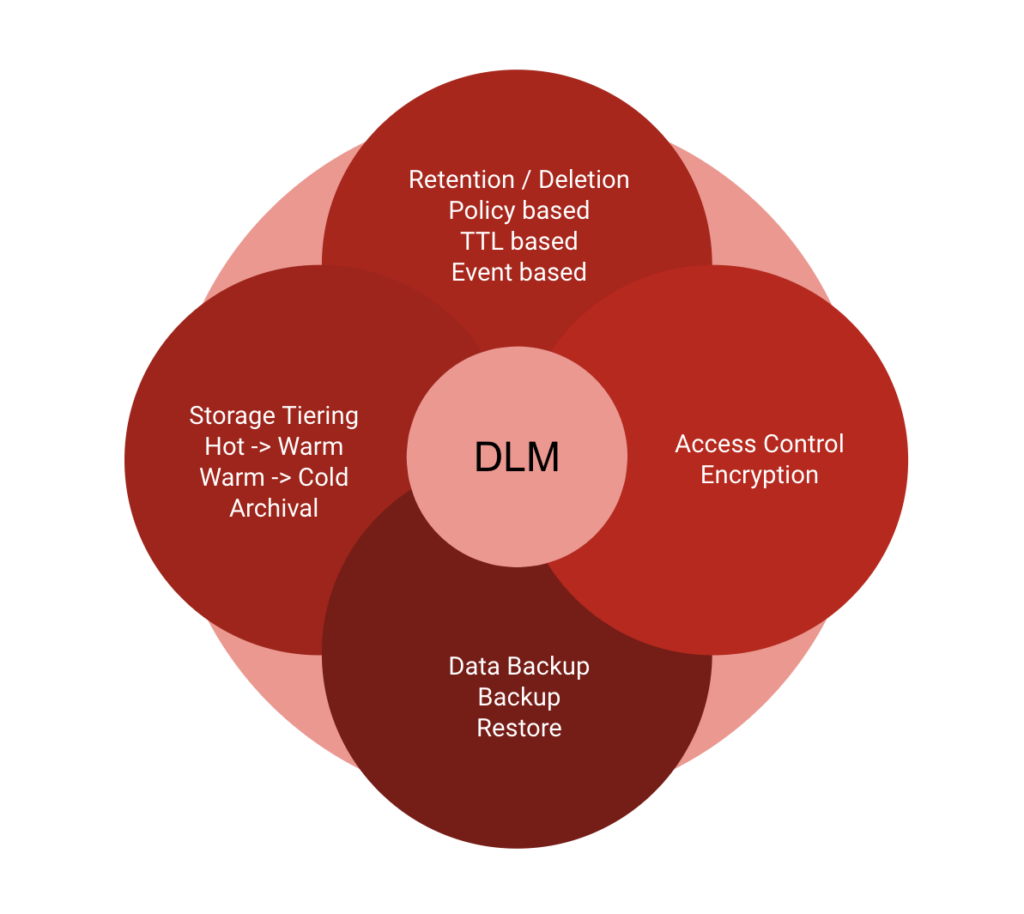

We now envision the DLM system as a unified system encompassing the entire range of data lifecycle operations, which form the end-to-end management of data.

A unified DLM system determines, onboards, executes, monitors, and audits different data lifecycle policies across multiple datastores.

DLM Themes

Policy Management

Policy management involves defining the business/compliance policy so that it can be checked for applicability to a dataset and deconflicted with other policies in the system (to determine the point-in-time applicability of the policy action for a particular dataset). The applicable policies should also be queryable by other systems.

SideNote: What is policy deconfliction? Why is it required?

Policies can be conflicting in terms of the action, such as retention vs. deletion (e.g., retention of financial transaction data vs. deletion of user PII, legal hold retention vs. deletion for cost savings), archival vs. deletion (e.g., archival for compliance vs. deletion for cost savings). In this scenario, the key is to identify policy precedence and which policy supersedes the other.

Policies can also be conflicting in terms of multiple policies applicable at the same time, such as hot → warm storage tiering, backup, and deletion of stale data. In this scenario, we need a prioritization of the policies to know which policy should be applied first vs. next.
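The two kinds of deconfliction described in this side note can be sketched as follows. This is a minimal illustration, not Uber's implementation: the `precedence` and `priority` fields, the numeric scales, and the `CONFLICTS` table are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class ApplicablePolicy:
    name: str
    action: str      # e.g. "retain", "delete", "archive", "tier", "backup"
    precedence: int  # assumed scale: higher wins when actions conflict
    priority: int    # assumed scale: lower runs first among compatible actions


# Assumed conflict table: pairs of actions that cannot both apply to a dataset.
CONFLICTS = {
    frozenset({"retain", "delete"}),
    frozenset({"archive", "delete"}),
}


def deconflict(policies: List[ApplicablePolicy]) -> List[ApplicablePolicy]:
    """Drop superseded policies, then order the survivors for execution."""
    survivors = []
    for p in policies:
        # A policy is superseded if a conflicting policy has higher precedence.
        # Ties in precedence are left unresolved in this sketch (both survive).
        superseded = any(
            frozenset({p.action, q.action}) in CONFLICTS
            and q.precedence > p.precedence
            for q in policies
        )
        if not superseded:
            survivors.append(p)
    # Among compatible policies, priority decides what is applied first.
    return sorted(survivors, key=lambda p: p.priority)


candidates = [
    ApplicablePolicy("cost_ttl_delete", "delete", precedence=1, priority=3),
    ApplicablePolicy("legal_hold", "retain", precedence=10, priority=0),
    ApplicablePolicy("weekly_backup", "backup", precedence=1, priority=1),
    ApplicablePolicy("hot_to_warm", "tier", precedence=1, priority=2),
]
print([p.name for p in deconflict(candidates)])
```

Here the legal hold supersedes the cost-driven deletion, while backup and tiering remain applicable and are simply ordered.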

Dataset Onboarding

If a dataset is identified as a candidate for one or more policies managed by the system, then the dataset may be onboarded for processing of Data Lifecycle Operations. Where approvals are required, the onboarding step requests them and tracks them to completion.

SideNote: Why are approvals required for a few policies?

A few policy actions may be destructive in nature (such as resulting in data deletion or access changes), so we need to ensure that the dataset owners and users are aware of the destructive nature of the policy action and that the systems/processes having dependencies on these datasets are capable of handling such a change.

Once ready and approved, the dataset may now be onboarded (allowed or exempted) for processing on the particular policies.
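One way to picture the onboarding flow is as a small state machine per dataset and policy pair. The sketch below is hypothetical: the state names, the `destructive` flag, and the `"approve"`/`"reject"` decisions are assumptions for illustration, and treating a rejected approval as an exemption is a simplification.

```python
from enum import Enum
from typing import Optional


class OnboardingState(Enum):
    CANDIDATE = "candidate"
    PENDING_APPROVAL = "pending_approval"
    ALLOWED = "allowed"    # policy actions may run on the dataset
    EXEMPTED = "exempted"  # dataset is excluded from the policy


def advance(
    state: OnboardingState, destructive: bool, decision: Optional[str] = None
) -> OnboardingState:
    """Return the next onboarding state for one dataset/policy pair."""
    if state is OnboardingState.CANDIDATE:
        # In this sketch, only destructive policy actions need an approval.
        if destructive:
            return OnboardingState.PENDING_APPROVAL
        return OnboardingState.ALLOWED
    if state is OnboardingState.PENDING_APPROVAL:
        if decision is None:
            return state  # still waiting on the dataset owner
        if decision == "approve":
            return OnboardingState.ALLOWED
        return OnboardingState.EXEMPTED
    return state  # ALLOWED and EXEMPTED are terminal in this sketch


state = advance(OnboardingState.CANDIDATE, destructive=True)
state = advance(state, destructive=True, decision="approve")
print(state)
```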

Policy Execution

Policy Action Execution is the step of actually triggering the policy actions and tracking them to completion. The actions correspond to the deconflicted policy applicable on the dataset, if any, at that point in time. This can be a single action for a policy, or it may involve multiple sequential or parallel actions, such as candidate identification, followed by owner approval notification, and then deletion.

In rare scenarios, Rollback of policy action may be required, owing to inadvertent errors from users in approving a dataset for a policy, or in the wrong choice of policy application configuration (such as setting an incorrect TTL value).

- Recover data after deletion: This can be needed in multiple cases, such as a wrong TTL configuration by the user, TTL enforcement initiatives to save cost, or the need to mitigate unforeseen downstream impact. To support this, we follow a two-phase delete: data is first soft-deleted into a stash location for a pre-defined duration, after which it is hard-deleted.

- Reverse tiering (undoing a hot → warm or warm → cold move): This is required to help balance load on the warm or cold storage tiers and to move frequently accessed data back to the hot tier.
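The two-phase delete can be illustrated on a local filesystem. This is a sketch under assumptions, not the production mechanism: the stash directory layout, the `<timestamp>__<name>` naming scheme, and the function names are all hypothetical, and a real datastore would use its own move and delete primitives.

```python
import shutil
import tempfile
from pathlib import Path
from typing import List


def soft_delete(path: Path, stash_dir: Path, now: int) -> Path:
    """Phase 1: move the data into the stash, tagging it with the stash time."""
    stash_dir.mkdir(parents=True, exist_ok=True)
    stashed = stash_dir / f"{now}__{path.name}"
    shutil.move(str(path), str(stashed))
    return stashed


def restore(stashed: Path, original: Path) -> None:
    """Rollback: move stashed data back before it has been purged."""
    shutil.move(str(stashed), str(original))


def purge_expired(stash_dir: Path, retention_seconds: int, now: int) -> List[str]:
    """Phase 2: hard-delete stash entries whose retention window has passed."""
    purged = []
    for entry in sorted(stash_dir.iterdir()):
        stashed_at = int(entry.name.split("__", 1)[0])
        if now - stashed_at >= retention_seconds:
            if entry.is_dir():
                shutil.rmtree(entry)
            else:
                entry.unlink()
            purged.append(entry.name)
    return purged


with tempfile.TemporaryDirectory() as root:
    partition = Path(root) / "part-0001"
    partition.write_text("rows")
    stash = Path(root) / ".stash"

    stashed = soft_delete(partition, stash, now=1000)
    # Within the retention window nothing is purged, so rollback is possible.
    print(purge_expired(stash, retention_seconds=100, now=1050))
    restore(stashed, partition)
    # Once the window has passed, the stashed copy is removed for good.
    soft_delete(partition, stash, now=2000)
    print(purge_expired(stash, retention_seconds=100, now=2100))
```

The retention window is the period during which a wrong TTL or an unexpected downstream dependency can still be corrected by a restore.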

Policy Monitoring

Monitoring of the Data Lifecycle Operations should cater to the different personas who are interested in tracking them.

Monitoring encompasses:

- Policy-level monitoring, for the policy/business owner to track the policy-funnel for a particular policy

  - # of potential candidate datasets

  - # of approved datasets

  - # of onboarded datasets

  - # and which datasets are executed within SLA for this policy

  - # and which datasets are not executed within SLA for this policy

- Dataset-level monitoring, for the dataset owner/users to track policy applicability and past execution status at a dataset level, which can be shown on the relevant UI

  - Applicable policies

  - Approval & policy-onboarding status

  - History of past few policy actions

  - Execution operation status for the last n days to know if the dataset is in SLA
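The policy-level funnel above can be computed from per-dataset status records. The sketch below assumes a hypothetical `DatasetStatus` record and defines "within SLA" as a successful execution no older than `sla_hours`; the real SLA definition is not given in this article.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class DatasetStatus:
    # Hypothetical per-dataset record for a single policy.
    dataset: str
    approved: bool
    onboarded: bool
    hours_since_last_success: Optional[float]  # None if never executed


def policy_funnel(statuses: List[DatasetStatus], sla_hours: float) -> Dict[str, object]:
    """Summarize the funnel for one policy, from candidates down to SLA status."""
    onboarded = [s for s in statuses if s.onboarded]
    in_sla = [
        s.dataset
        for s in onboarded
        if s.hours_since_last_success is not None
        and s.hours_since_last_success <= sla_hours
    ]
    in_sla_set = set(in_sla)
    out_of_sla = [s.dataset for s in onboarded if s.dataset not in in_sla_set]
    return {
        "candidates": len(statuses),
        "approved": sum(1 for s in statuses if s.approved),
        "onboarded": len(onboarded),
        "in_sla": in_sla,
        "out_of_sla": out_of_sla,
    }


statuses = [
    DatasetStatus("trips", approved=True, onboarded=True, hours_since_last_success=6.0),
    DatasetStatus("riders", approved=True, onboarded=True, hours_since_last_success=72.0),
    DatasetStatus("eats_orders", approved=True, onboarded=False, hours_since_last_success=None),
    DatasetStatus("payments", approved=False, onboarded=False, hours_since_last_success=None),
]
print(policy_funnel(statuses, sla_hours=24))
```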

Auditing

Auditing involves recording all the actions of the system, including candidate identification, approvals, onboarding, and policy action details (like policy action type, trigger time, trigger parameters, completion time, completion status, operation details like steps performed and partitions processed, failure reason, etc.).
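As an illustration, an audit entry for a policy action could carry the details listed above as a structured record. The `PolicyActionAudit` class, its field names, and the JSON-line serialization are assumptions for this sketch and do not describe the actual audit schema.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Dict, List, Optional


@dataclass
class PolicyActionAudit:
    # Hypothetical record; fields follow the policy action details listed above.
    dataset: str
    policy: str
    action_type: str
    trigger_time: str                    # ISO-8601 timestamp
    trigger_parameters: Dict[str, str]
    completion_time: Optional[str] = None
    completion_status: str = "in_progress"
    steps_performed: List[str] = field(default_factory=list)
    partitions_processed: List[str] = field(default_factory=list)
    failure_reason: Optional[str] = None


def to_audit_line(record: PolicyActionAudit) -> str:
    """Serialize a record as one JSON line, suitable for an append-only log."""
    return json.dumps(asdict(record), sort_keys=True)


record = PolicyActionAudit(
    dataset="trips",
    policy="partition_ttl",
    action_type="delete",
    trigger_time="2024-01-01T00:00:00Z",
    trigger_parameters={"ttl_days": "90"},
)
record.steps_performed.append("soft_delete")
record.partitions_processed.append("datestr=2023-10-01")
record.completion_time = "2024-01-01T00:05:00Z"
record.completion_status = "succeeded"
print(to_audit_line(record))
```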

DLM Dimensions

Lifecycle Policies

There are a variety of Data Lifecycle Policies which cater to different end-goals.

Below is an indicative list of such lifecycle policies:

| Policy | Goal |
| --- | --- |
| Policy-based retention/deletion: user-hold retention, user-data deletion, time-based deletion (for PII and sensitive data) | #Compliance |
| Policy-based encryption | #Compliance |
| TTL deletion: partition TTL, table TTL | #CostEfficiency |
| Tiering: hot → warm, warm → cold, archival | #CostEfficiency |
| Backup & Restore (point-in-time snapshots) | #Reliability |

Datastores

We have multiple active datastores in Uber, for which one or more Lifecycle Policies are required. Below is an indicative list of such datastores:

- Big-data Datastores such as Hive, HDFS

- Storage-Infra Datastores such as MySQL, Cassandra

- Public Cloud such as Azure Storage, Google Cloud Storage or AWS S3 Storage

Dependencies

Metadata Source

The DLM System depends upon a Metadata Source, which can serve as a source of truth for the classification and tagging of the information in the datasets.

At Uber, we leverage a centralized Metadata platform, which serves as a single source of truth for all dataset metadata.

Business Policies

The business or compliance policies that must be adhered to are an important input to the DLM System. These are represented in an appropriate form for defining the policy rules, codifying the applicability, and specifying the policy action.

Architecture

Service Architecture

The high-level architecture of the DLM Service is shown above.

| Characteristic | Details |
| --- | --- |
| Sources | The DLM Service relies on two inputs to the system: the Metadata Source System, to provide the dataset metadata; and the Policy Store, as the source of all relevant Data Lifecycle Policies |
| Policy Determination | For each dataset, the DLM Service determines the relevant applicable policies (after deconfliction) |
| Dataset Onboarding | For each policy, the candidate datasets are identified and onboarded with an approval process, if required |
| Policy Action | The DLM Service executes the policy action on the dataset using the appropriate DataStore Plugin |
| Monitoring | The DLM Service has an independent module to monitor at a policy level, and uses the policy-action-status history to monitor at the dataset level |
| Audit | All actions of the DLM Service are audited for investigation/audit purposes |
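The Policy Action row mentions a DataStore Plugin per datastore. One plausible shape for such a plugin layer is sketched below; the `DataStorePlugin` interface, its `execute` signature, the `PluginRegistry`, and the in-memory plugin are all hypothetical and only illustrate how a central service can dispatch the same policy action to different datastores.

```python
from abc import ABC, abstractmethod
from typing import Dict, List, Optional, Tuple


class DataStorePlugin(ABC):
    """Hypothetical interface: one implementation per datastore."""

    @abstractmethod
    def execute(self, dataset: str, action: str, params: Dict[str, str]) -> str:
        """Run a policy action on a dataset and return a completion status."""


class InMemoryPlugin(DataStorePlugin):
    """Stand-in plugin that only records the calls it receives."""

    def __init__(self) -> None:
        self.calls: List[Tuple[str, str, Dict[str, str]]] = []

    def execute(self, dataset: str, action: str, params: Dict[str, str]) -> str:
        self.calls.append((dataset, action, params))
        return "succeeded"


class PluginRegistry:
    """Maps a datastore name to the plugin that executes actions on it."""

    def __init__(self) -> None:
        self._plugins: Dict[str, DataStorePlugin] = {}

    def register(self, datastore: str, plugin: DataStorePlugin) -> None:
        self._plugins[datastore] = plugin

    def run(
        self,
        datastore: str,
        dataset: str,
        action: str,
        params: Optional[Dict[str, str]] = None,
    ) -> str:
        plugin = self._plugins.get(datastore)
        if plugin is None:
            raise KeyError(f"no plugin registered for datastore {datastore!r}")
        return plugin.execute(dataset, action, params or {})


registry = PluginRegistry()
hive = InMemoryPlugin()
registry.register("hive", hive)
print(registry.run("hive", "trips", "delete", {"ttl_days": "90"}))
```

Keeping the datastore-specific logic behind one interface is what allows policy determination and monitoring to stay common across datastores.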

Salient Aspects

Workflow Orchestration Engine

The Processing Elements are implemented as Workflows in Cadence. Cadence is a distributed, scalable, durable, and highly available workflow orchestration engine to execute asynchronous long-running business logic in a scalable and resilient way.

Internal Entity Store

The Internal Entity Store is powered by DocStore. DocStore is an Uber-internal distributed general-purpose SQL database that provides strong consistency guarantees and can scale horizontally to serve high-volume workloads.

Additional Considerations

Leveraging Existing Systems

The DLM Service enables reuse of the core of existing systems by providing specific Integration Points.

- Separation of Policy Action Execution:

For a particular policy, the policy action execution may happen outside of DLM for a specific policy/DataStore, but the Policy Management is centralized in DLM and the Monitoring is integrated with the policy execution service. For example, the Encryption action is performed by a separate service, but the Policy Management is performed by DLM and used by the Encryption service. This helps to provide unified monitoring with Encryption action details.

- Reuse of Policy Action Execution Core Logic:

The policy action can be integrated from an existing service by encapsulating it within the DataStore Plugin. For example, the User Data Deletion policy action invokes a Spark job for a specific DataStore Plugin, which internally performs the action execution. This core logic is reused by the existing service, as well as the new unified DLM service.

Extending to Ad Hoc Auxiliary Workflows

The DLM Service supports integration with ad hoc extensions, in cases of Candidate Identification or Approval Mechanism. Suitable state updates into the Internal Entity Store by the ad hoc extensions can provide the DLM Service the distinction between the dataset entities still pending on these steps, vs. the ones which have already been processed for these steps.

For example, there is a separate legacy service which front-ends the TTL deletion approval, and updates the dataset metadata with the desired TTL value which is then processed by the DLM Service.

Supporting One-Time Operations

The DLM Service can be utilized for any one-time operations, such as one-time deletion or one-time hot → warm tiering, by triggering the relevant Cadence workflows with the right dataset parameters. The Cadence workflows are structured in a manner that allows them to be triggered at a dataset level for a one-time action.

Conclusion

We are currently migrating the different Lifecycle Operations from our legacy systems to the new Unified DLM system. As we do so, we are able to extend the policies to a greater range of assets like pipelines, Google Workspace, MySQL records, etc., and achieve seamless integration across different datastores, all the while providing centralized monitoring across all the policies and datasets.

DLM has had a significant evolutionary journey, and is now recognized for its critical impact on all the 3 goals of compliance, cost efficiency, and reliability. With the unified DLM system, we have made this a single platform-service which delivers DLM at Uber scale.
